Two time series and their cross-correlation functions

The cross-correlation function (CCF) is a measure of similarity of two time series as a function of the lag of one relative to the other. The CCF can be imagined as a procedure of overlaying two series printed on transparency films and sliding them horizontally to find possible correlations. For this reason, it is also known as a "sliding dot product."
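To make the "sliding dot product" idea concrete, here is a minimal sketch in Python with NumPy. The function name `ccf`, the `max_lag` parameter, and the synthetic data are all made up for illustration; they are not taken from the tool that produced the figure in this post. The sketch standardizes both series and then, for each lag, takes the dot product of the overlapping parts.

```python
import numpy as np

def ccf(a, b, max_lag):
    """Correlation between a[t] and b[t + k] for k = 0..max_lag.

    Illustrative helper: assumes equal-length, non-constant series.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Standardize so that the value at lag 0 is the ordinary Pearson correlation.
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    n = len(a)
    # Slide b to the left by k samples and take the dot product of the overlap.
    return np.array([np.dot(a[:n - k], b[k:]) / n for k in range(max_lag + 1)])

# Synthetic example (not the student's data): b repeats a three steps later.
rng = np.random.default_rng(0)
series_a = rng.normal(size=200)
series_b = np.roll(series_a, 3)

values = ccf(series_a, series_b, max_lag=10)
best_lag = int(np.argmax(values))
print(best_lag)
```

Dividing by the full length `n` rather than by the length of the overlap is one common convention; it slightly shrinks the values at large lags.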

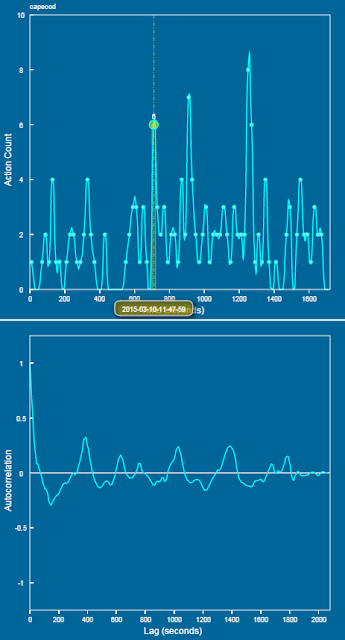

The upper graph in the figure above shows two time series from a student's engineering design process. They represent about 45 minutes of her construction (white line) and analysis (green line) activities as she tried to design an energy-efficient house, with the goal of cutting its net energy consumption to zero. At first glance, it is hard to tell what these lines mean or how they might be related.

But their CCFs reveal something more striking: the lower graph shows two curves that peak at certain lags. That probably raises a few questions, so let me try to answer them below.

Why are there two curves for depicting the correlation of two time series, say, A and B? This is because there is a difference between "A relative to B" and "B relative to A." Imagine that you print the series on two transparency films and slide one on top of the other. Which one is on top matters. If you are looking for possible cause-effect relationships using the CCF, you can treat the antecedent time series as the candidate cause and the subsequent time series as the candidate effect.
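One way to see where the two curves come from is to compute the CCF over both negative and positive lags and then split it at lag zero. The sketch below does this with `np.correlate`; the helper name `two_sided_ccf` and the synthetic series are hypothetical, and I am assuming that each curve in the figure covers non-negative lags in one direction.

```python
import numpy as np

def two_sided_ccf(a, b, max_lag):
    """Return (a_then_b, b_then_a), each indexed by lag 0..max_lag.

    Illustrative helper: assumes equal-length, non-constant series
    and max_lag < len(a).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n = len(a)
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    # full[n - 1 + k] = sum over t of a[t] * b[t + k], for k = -(n-1)..(n-1)
    full = np.correlate(b, a, mode="full") / n
    a_then_b = full[n - 1 : n + max_lag]         # B follows A by k steps
    b_then_a = full[n - 1 - max_lag : n][::-1]   # A follows B by k steps
    return a_then_b, b_then_a

# Synthetic example (not the student's data): B echoes A five steps later.
rng = np.random.default_rng(1)
series_a = rng.normal(size=300)
series_b = np.roll(series_a, 5)

a_then_b, b_then_a = two_sided_ccf(series_a, series_b, max_lag=20)
print(int(np.argmax(a_then_b)), float(b_then_a[1:].max()))
```

In this toy case the "A then B" curve has a clear peak at lag 5, while the "B then A" curve stays near zero for every positive lag. The two curves share the same value at lag 0.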

What does a peak in the CCF mean, anyway? It points you to where more interesting things may lie: a peak at a given lag means that activity in one series tends to be followed, that much later, by activity in the other. In the figure in this post, this particular student's construction activities were followed by analysis activities at a significant level about four times (two of them within 10 minutes), whereas her analysis activities were followed by construction activities at a significant level only once (after 10 minutes).
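If you want to pick out such peaks programmatically, here is one rough way to do it. This post does not say how significance was judged for the figure, so the threshold below is my assumption: the approximate 95% bound of 1.96/sqrt(n) that is often used for uncorrelated series. The function name `significant_peaks` and the sample values are hypothetical.

```python
import numpy as np

def significant_peaks(ccf_values, n_samples, z=1.96):
    """Return (lags, threshold) for local maxima above z / sqrt(n_samples).

    Assumption: the white-noise bound is an adequate significance test.
    """
    c = np.asarray(ccf_values, dtype=float)
    threshold = z / np.sqrt(n_samples)
    lags = []
    # Skip the first and last lags, which have no neighbor on one side.
    for k in range(1, len(c) - 1):
        is_local_max = c[k] >= c[k - 1] and c[k] > c[k + 1]
        if is_local_max and c[k] > threshold:
            lags.append(k)
    return lags, threshold

# Made-up CCF values at lags 0..6, as if computed from 100 samples.
example = [0.0, 0.1, 0.6, 0.2, 0.05, 0.3, 0.1]
lags, threshold = significant_peaks(example, n_samples=100)
print(lags, round(threshold, 3))
```

Real activity logs are usually autocorrelated, so this bound tends to be too lenient. Treat the peaks it flags as places to look more closely, not as proof of a relationship.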