The National Science Foundation (NSF) has awarded Bowling Green State University (BGSU) and Concord Consortium (CC) an exploratory grant of $300K to investigate how chemical imaging based on infrared (IR) thermography can be used in chemistry labs to support undergraduate learning and teaching.

Chemists often rely on visually striking color changes shown by pH, redox, and other indicators to detect or track chemical changes. About six years ago, I realized that IR imaging may represent a novel class of universal indicators. Instead of using halochromic compounds, these indicators use false-color heat maps to visualize any chemical process that involves the absorption, release, or distribution of thermal energy (see my original paper published in 2011). I felt that IR thermography could one day become a powerful imaging technique for studying chemistry and biology. Because the technique does not use any chemical substance as a detector, it could be considered a "green" indicator.

Although IR cameras are not new, inexpensive lightweight models have become available only recently. The release of two competitively priced smartphone IR cameras in 2014 marked the beginning of an era of personal thermal vision. In January 2014, FLIR Systems unveiled the $349 FLIR ONE, the first IR camera that could be attached to an iPhone. Months later, a startup company, Seek Thermal, released a $199 IR camera that has an even higher resolution and can be connected to most smartphones. The race was on to make better and cheaper cameras. In January 2015, FLIR announced the second-generation FLIR ONE camera, priced at $231 on Amazon. With an educational discount, an IR camera now costs about as much as a single sensor (e.g., Vernier sells an IR thermometer for $179). All these new cameras can take IR images and record IR videos just as easily as conventional photos and videos. The manufacturers also provide application programming interfaces (APIs) that let developers blend thermal vision and computer vision on a smartphone to create interesting apps.

Not surprisingly, many educators, including ourselves, have realized the value of IR cameras for teaching topics such as thermal radiation and heat transfer that are naturally supported by IR imaging. Applications in other fields such as chemistry, however, seem less obvious and remain underexplored, even though almost every chemistry reaction or phase transition absorbs or releases heat. The NSF project will focus on showing how IR imaging can become an extraordinary tool for chemical education. The

project aims to develop seven curriculum units based on the use of IR

imaging to support, accelerate, and expand inquiry-based learning for a

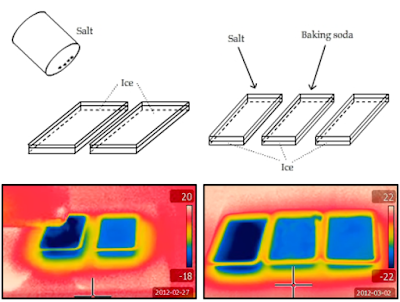

wide range of chemistry concepts. The units will employ the predict-observe-explain (POE) cycle to scaffold inquiry in laboratory activities based on IR imaging. To demonstrate the versatility and generality of this approach, the units will cover a range of topics, such as thermodynamics, heat transfer, phase change, colligative properties (Figure 1), and enzyme kinetics (Figure 2).

The research will focus on finding robust evidence of learning due to IR imaging, with the goal of identifying the underlying cognitive mechanisms and recommending effective strategies for using IR imaging in chemistry education. The study will be conducted with a diverse student population at BGSU, Boston College, Bradley University, Owens Community College, Parkland College, St. John Fisher College, and SUNY Geneseo.

Partial support for this work was provided by the National Science Foundation's Improving Undergraduate STEM Education (IUSE) program under Award No. 1626228. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Fig. 1: IR-based differential thermal analysis of freezing point depression

Fig. 2: IR-based differential thermal analysis of enzyme kinetics
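Differential thermal analysis compares the temperature of a sample with that of a reference that undergoes no reaction or phase change. With an IR camera, both can be read from the same thermal frame. The sketch below shows the idea under stated assumptions. Each frame is assumed to be already exported as a 2D grid of temperatures in °C. The rectangular region format, the helper names, and the 0.2 °C threshold are hypothetical illustrations, not part of any camera API or of the project's materials. A positive difference is read as the sample releasing heat (for example, latent heat during freezing), and a negative one as the sample absorbing heat. This reading only holds if the sample and reference would otherwise track the same temperature.

```python
from statistics import mean

# Hypothetical region format: (row_start, row_end, col_start, col_end), end-exclusive.
Region = tuple[int, int, int, int]


def region_mean(frame: list[list[float]], region: Region) -> float:
    """Average temperature (deg C) over a rectangular region of one thermal frame."""
    r0, r1, c0, c1 = region
    return mean(frame[r][c] for r in range(r0, r1) for c in range(c0, c1))


def differential_trace(frames: list[list[list[float]]],
                       sample: Region, reference: Region) -> list[float]:
    """Sample-minus-reference temperature difference for each frame, in time order."""
    return [region_mean(f, sample) - region_mean(f, reference) for f in frames]


def interpret(delta: float, threshold: float = 0.2) -> str:
    """Rough reading of one difference. Assumes sample and reference would otherwise
    track the same temperature; the 0.2 deg C threshold is an arbitrary placeholder."""
    if delta > threshold:
        return "sample releasing heat"
    if delta < -threshold:
        return "sample absorbing heat"
    return "no clear heat effect"


def make_frame(sample_temp: float, reference_temp: float) -> list[list[float]]:
    """Synthetic 2x4 frame: left half is the sample well, right half the reference."""
    return [[sample_temp, sample_temp, reference_temp, reference_temp] for _ in range(2)]


if __name__ == "__main__":
    # Made-up frames for illustration only, not measured data.
    frames = [make_frame(-1.0, -1.0), make_frame(-0.2, -2.0), make_frame(-3.1, -3.0)]
    trace = differential_trace(frames, sample=(0, 2, 0, 2), reference=(0, 2, 2, 4))
    for delta in trace:
        print(f"{delta:+.1f} deg C -> {interpret(delta)}")
```

Averaging over a region rather than reading a single pixel is a deliberate choice here. It damps pixel-level noise, which matters for low-resolution smartphone cameras.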
