During the 2010 FIFA World Cup eight years ago, a common octopus named

Paul the Octopus

gained worldwide attention because it accurately "predicted" all the

results of the most important soccer matches in the world (sadly it died by natural courses

shortly after that). Perhaps Paul the Octopus just got extraordinarily lucky. Eight

years later, as reported by the MIT Technology Review,

artificial intelligence has been used in its stead to predict the World Cup (which I doubt would achieve the 100% success rate as the famous octopus did marvelously).

|

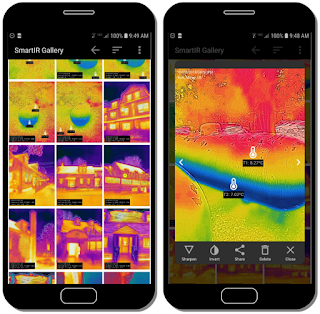

| Fig. 1: An illustration of the Octopus Algorithm |

While my research on artificial intelligence (AI) has nothing to do

with predicting which team would win the World Cup this year, octopuses

have become one of my inspirations in the past few days. My work is about developing

AI techniques that support learning and teaching through solving vastly

open-ended problems such as scientific inquiry and engineering design.

One of the greatest challenges in such problem-solving tasks is about

how to automatically generate formative feedback based on automatically

assessing student work. Typically, the purpose of this kind of feedback

is to gradually direct students to some kind of goals, for example, to

achieve the most energy-efficient design of a building that meets all

the specs. Formative feedback is critically important to ensuring the

success of

project-based learning. Based on my own experiences, however, many

students have great difficulties making progress towards the goal in the short amount of

time typically available in the classroom. Given the time constraints, they need help on an

ongoing basis. But it is not realistic to expect the teacher to

simultaneously monitor a few dozen students while they are working on

their projects and provide timely feedback to each and every one of them. This is where AI can help.

This is why we are developing new pedagogical principles and

instructional strategies, hoping to harness the power of AI to spur

students to think more deeply, explore more widely, and even design more

creatively.

|

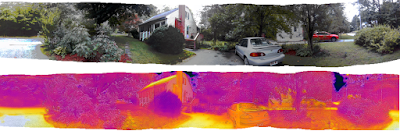

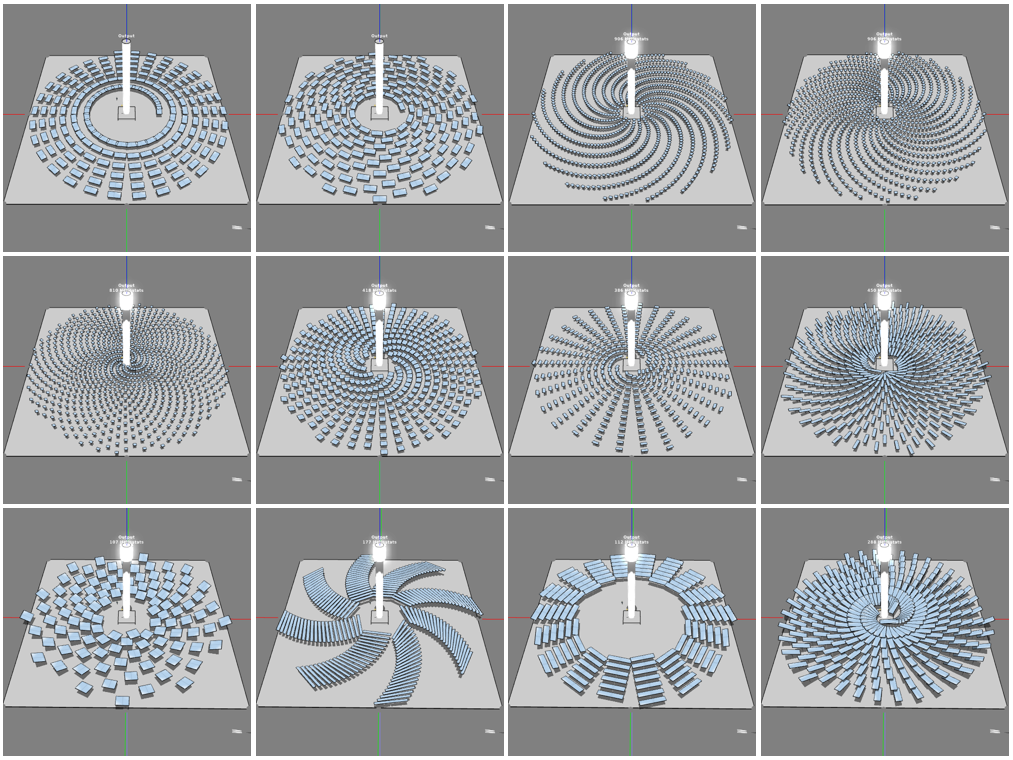

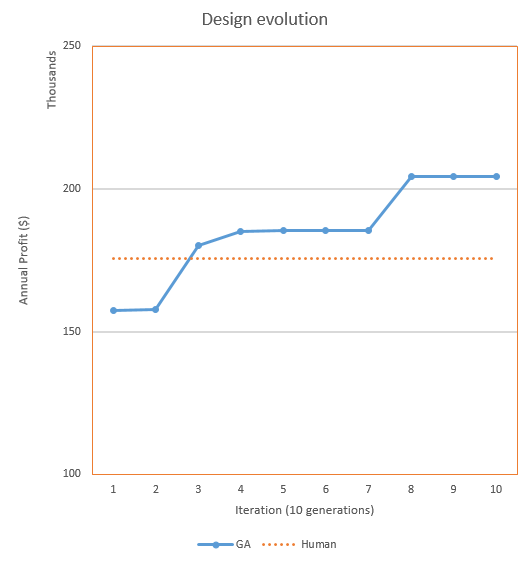

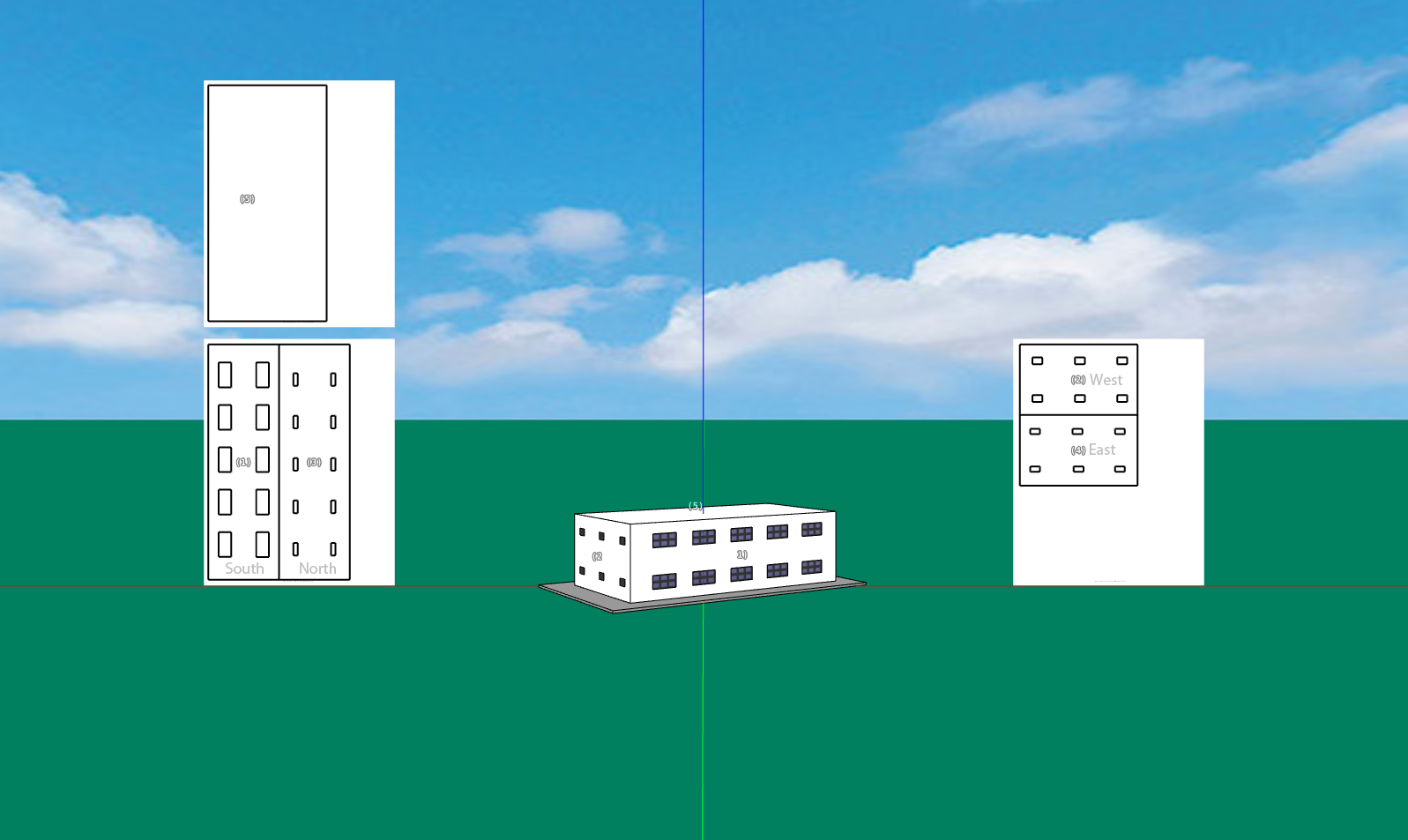

| Fig. 2: Learning from AI through a competition-based strategy |

Although this general idea of using AI in education makes sense,

developing reliable algorithms that can automatically guide

students to solve massively open-ended problems such as engineering

design is by no means a small job. Through three months of intense work

in this field, I have developed

genetic algorithms that can be used to find optimal solutions in complex design environments such as the

Energy3D CAD software, which you can find in earlier articles published through

my blog.

These algorithms were proven to be effective for optimizing certain

engineering problems, but to call them AI, we will need to identify what

kind of human intelligence they are able to augment or

replace. In my current point of view, an apparent class of AI

applications is about mimicking certain instructional capacities of

peers and teachers. In order to create an

artificial peer or even an

artificial instructor,

we would have to figure out the algorithms that simulate the constructive

interactions between a student and a peer or between a student and an

instructor.

Hence, an optimization algorithm that yields the best solution in a

long single run is not very useful to developers and educators as it

doesn't provide sufficient opportunities for engaging students. You can

imagine that type of algorithm as someone who does something very fast

but doesn't pause to explain to the learner how he or she does the job.

To create a developmentally appropriate tool, we will need to slow down

the process a bit -- sort of like the creeping of an octopus -- so that

the learner can have a chance to observe, reflect, internalize, and

catch up when AI is solving the problem step by step. This kind of

algorithm is known as

local search,

a technique for finding an optimal solution in the vicinity of a starting point that represents the learner's current state (as opposed to

global search that casts a wide net across the entire solution space, representing

equally all possibilities regardless of the learner's current state).

Random optimization

is one of the local search methods proposed in 1965, which

stochastically generates a set of candidate solutions distributed around

the initial solution in accordance with the

normal distribution.

The graphical representation of a normal distribution is a bell curve

that somewhat resembles the shape of an octopus (Figure 1). When using a genetic

algorithm to implement the local search, the two red edge areas in Figure 1

can be imagined as the "tentacles" for the "octopus" to sense "food"

(optima), while the green bulk area in the middle can be imagined as the "body" for it to "digest the catches" (i.e., to concentrate on local search). Once an optimum is "felt" (i.e., one or more solution

points close to the optimum is included in the randomly generated population of the genetic

algorithm), the "octopus" will move towards it (i.e., the best

solution from the population will converge to the optimum) as driven by

the genetic algorithm. The length of the "tentacles," characterized by the standard

deviation of the normal distribution, dictates the pace in which the

algorithm will find an optimum. The smaller the standard deviation, the

slower the algorithm will locate an optimum.

I call this

particular combination of random optimization and genetic algorithm the

Octopus Algorithm as it intuitively mimics how an octopus hunts on the sea floor (and, in part, to honor Paul the Octopus and to celebrate the 2018 World Cup Tournament).

With a controlled drift speed, the Octopus Algorithm can be applied to

incrementally correct the learner's work in a way that goes back and forth between human and computer, making it

possible for us to devise a competition-based learning strategy as

illustrated in Figure 2.

Although there are still a lot of technical details that need to be ironed

out as we make progress, this competition-based strategy essentially

represents an idea to turn a design process into some kind of

adversarial gaming (e.g., chess or Go), which challenges students to

race against a computer towards an agreed goal but with an unpredictable

outcome (either the computer wins or the human wins). It is our hope that AI would ultimately serve as a tool to train students to design effectively just like what it has

already done for training chess or Go players.

|

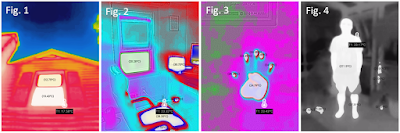

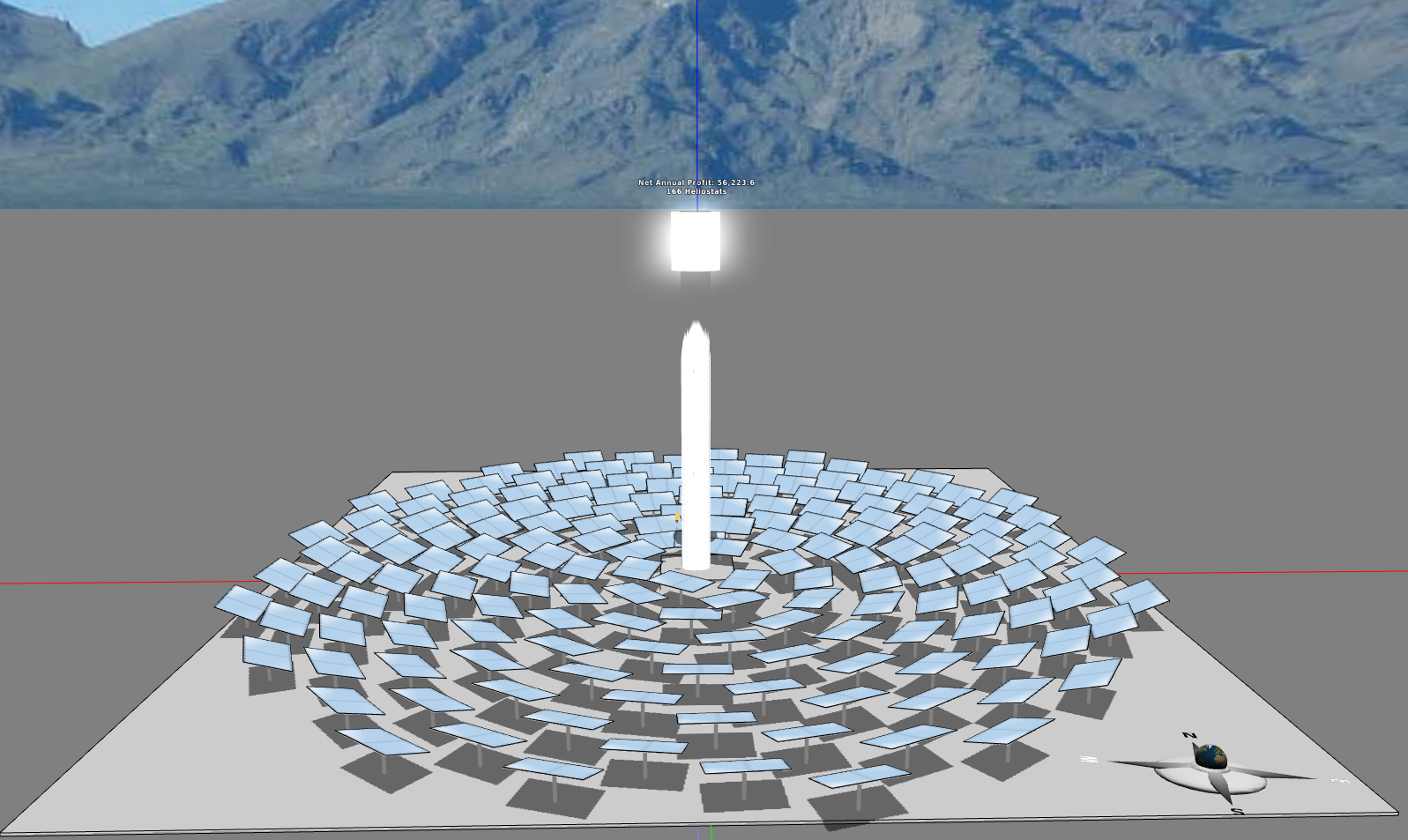

| Fig. 3: Evolution of population in the Octopus Algorithm |

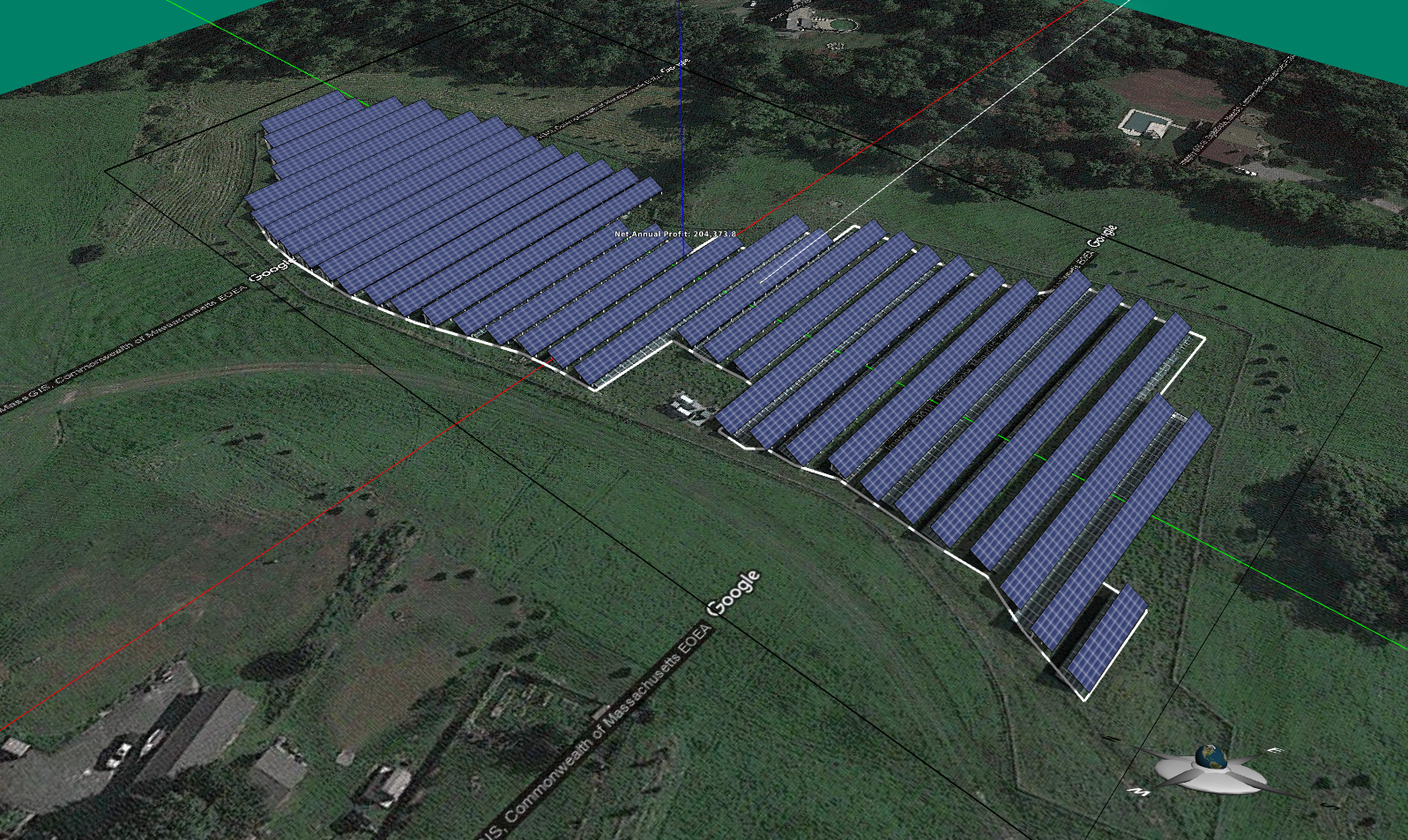

How does the Octopus Algorithm work, I hear you are curious? I have tested it

with some simple test functions such as certain sinusoidal

functions (e.g., |sin(

nx)|) and found that it worked for those

test cases. But since I have the Energy3D platform, I can readily test

my algorithms with real-world problems instead of some toy problems. As the first real-world example,

let's check how it finds the optimal tilt angle of a single row of solar

panels for a selected day at a given location (we can do it for the

entire year, but it takes much longer to run the simulation with not

much more to add in terms of testing the algorithm). Let's assume that

the initial guess for the tilt angle is zero degree (if you have no idea which way and how much the solar panels should be

tilted, you may just lay them flat as a reasonable starting point). Figure 3 shows the

results of four consecutive runs. The graphs in the left column show the

normal distributions around the initial guess and the best emerged after each round (which was used as the initial guess for the next round). The graphs in the right column show the final distribution of the population at the end of each round. The first and second runs show that the "octopus" gradually drifted left. At the end of the third run, it had converged to the final

solution. It just stayed there at the end of the fourth run.

|

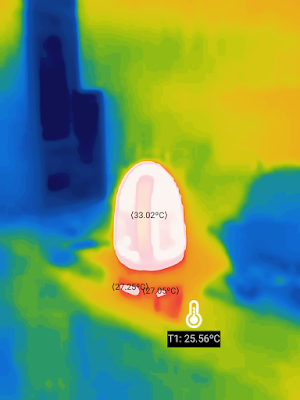

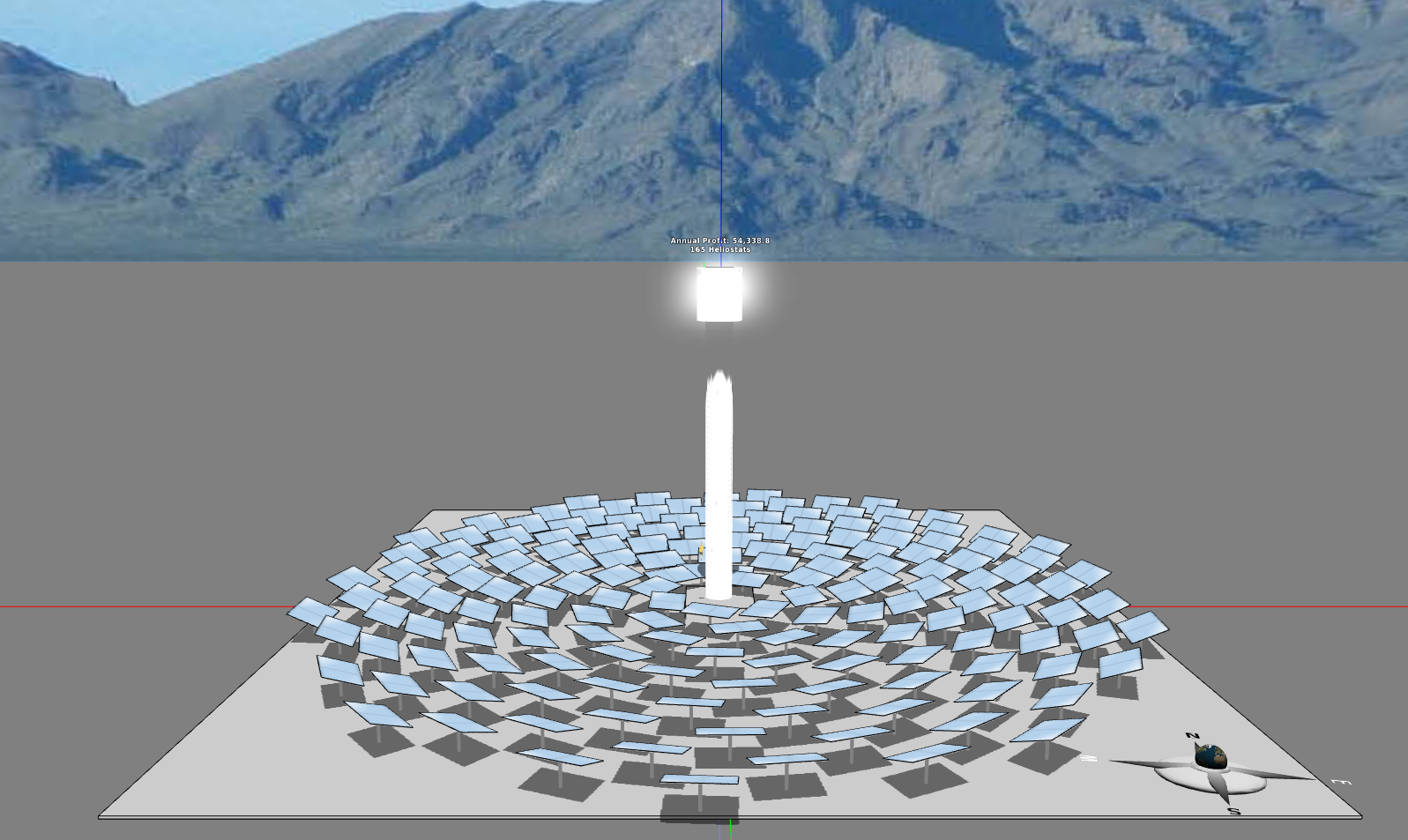

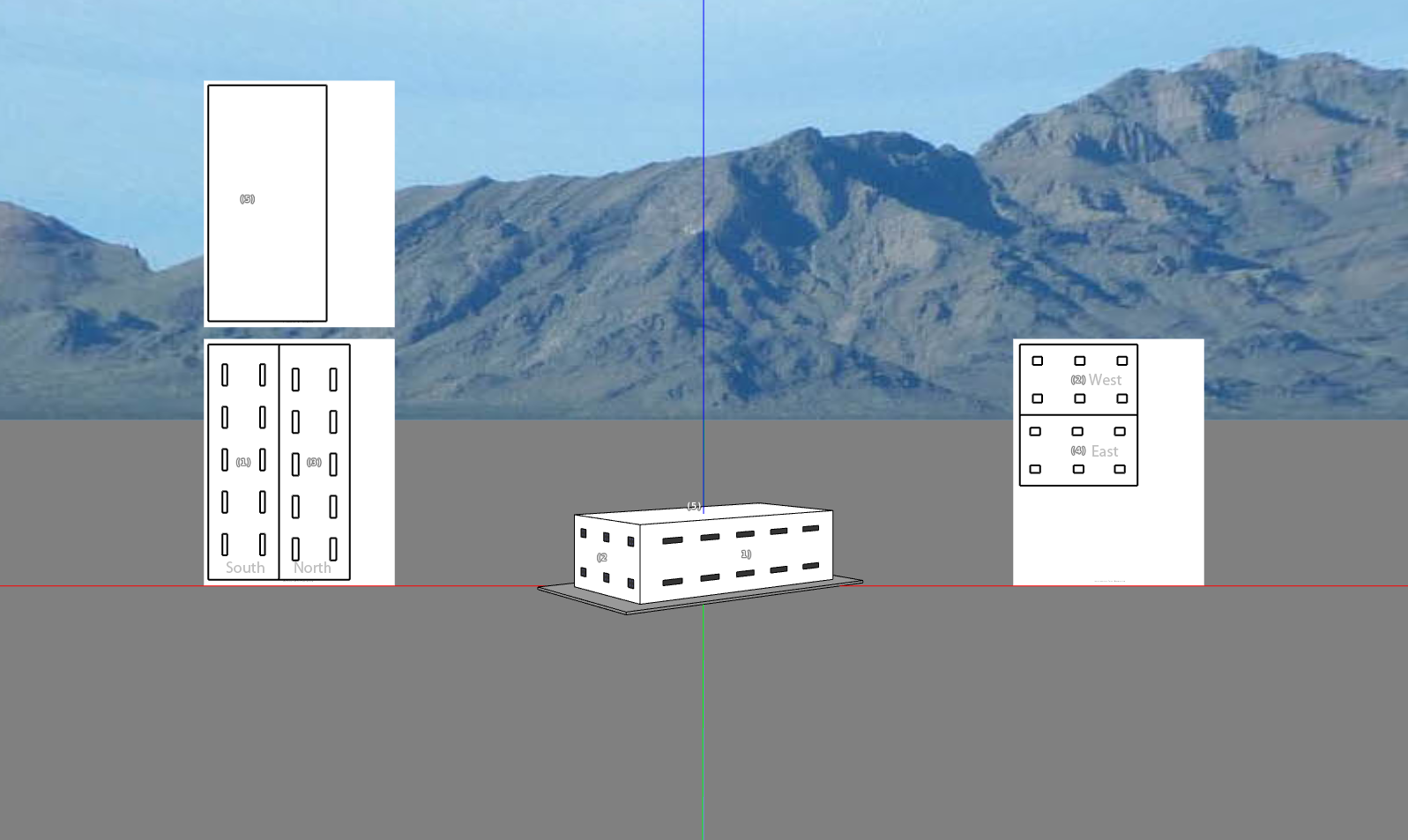

| Fig. 4: Using four "octopuses" to locate four optimal orientations for the energy efficiency of a house. |

|

| Fig. 5: Locating the nearest optimum |

When there are multiple optima in the solution space (a problem known as

multimodal optimization),

it may be appropriate to expect that AI would guide students to the

nearest optimum. This may also be a recommendation by learning theories

such as the

Zone of Proximal Development introduced

by Russian psychologist Lev Vygotsky. If a student is working in a

certain area of the design space, guiding him or her to find the best

option within that niche seems to be the most sensible instructional

strategy. With a conventional genetic algorithm that performs global

search with uniform initial selection across the solution space, there

is simply no guarantee that the suggested solution would take the

student's current solution into consideration, even though his/her

current solution can be included as part of the first generation (which,

by the way, may be quickly discarded if the solution turns out to be a

bad one). The Octopus Algorithm, on the other hand, respects the

student's current state and tries to walk him/her through the process

stepwisely. In theory, it is a better technique to support

advanced personalized learning, which is

the number one in the 14 grand challenges for engineering in the 21st century posed by the National Academy of Engineering of the United States.

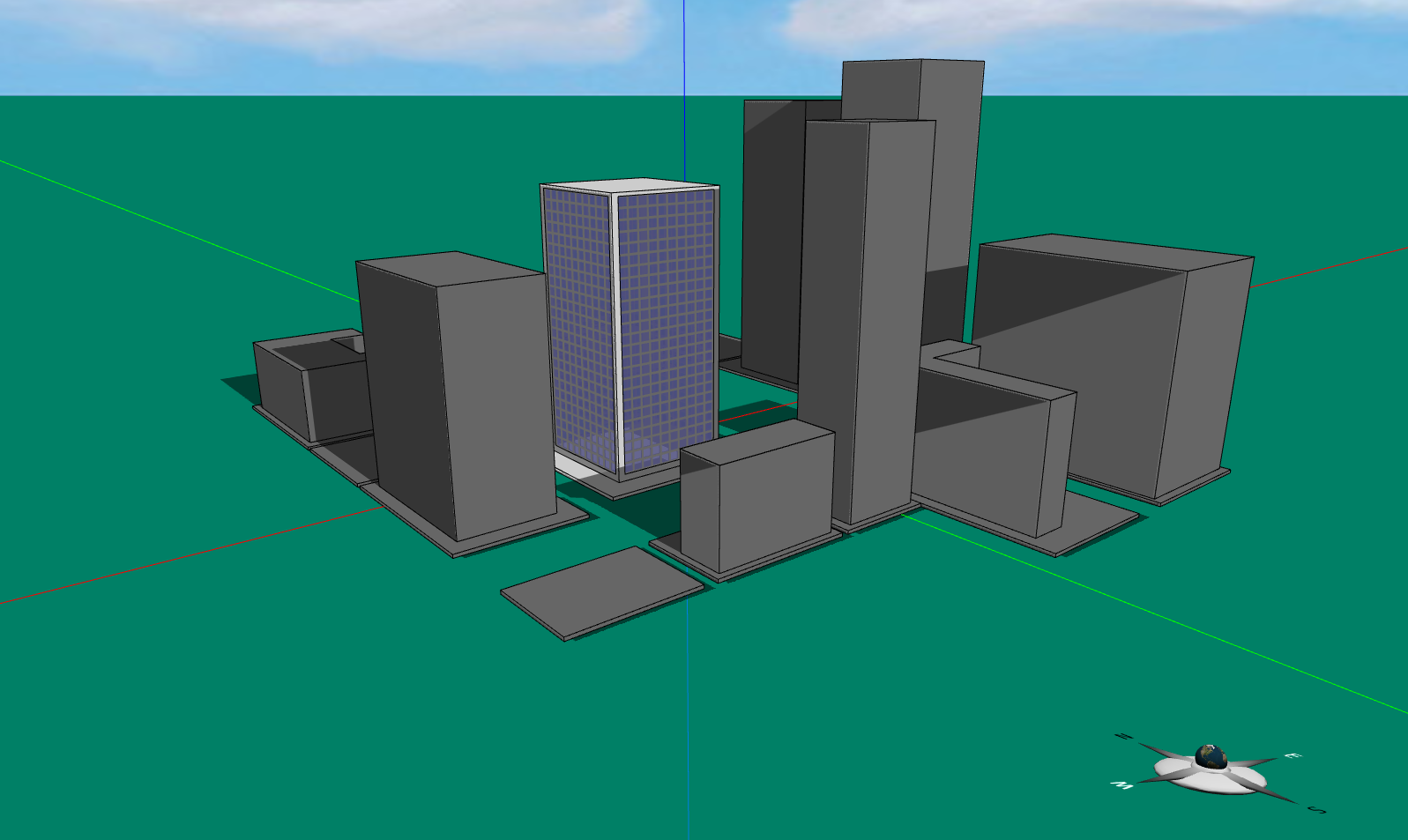

Let's see how the Octopus Algorithm finds multiple optima. Again, I

have tested the algorithm with simple sinusoidal functions and found that it

worked in those test cases. But I want to use a real-world example from

Energy3D to illustrate my points. This example is concerned with

determining the optimal orientation of a house, given that everything

else has been fixed. By manual search, I found that there are basically four different orientations

that could result in comparable energy efficiency, as shown in Figure 4.

|

| Fig. 6: "A fat octopus" vs. "a slim octopus." |

Now let's pick four different initial guesses and see which optimum

each "octopus" finds. Figure 5 shows the results. The graphs in the left

column show the normal distributions around the four initial guesses.

The graphs in the right column show the final solutions to which the Octopus

Algorithm converged. In this test case, the algorithm succeeded in

ensuring nearest guidance within the zone of proximal development. Why

is this important? Imagine if the student is experimenting with a

southwest orientation but hasn't quite figured out the optimal angle. An

algorithm that suggests that he or she should abandon the current line

of thinking and consider another orientation (say, southeast) could

misguide the student and is unacceptable. Once the student arrives at an optimal solution nearby, it may be

desirable to prompt him/her to explore alternative solutions by choosing

a different area to focus and repeat this process as needed. The

ability for the algorithm to detect the three other optimal solutions

simultaneously, known as multi-niche optimization, may not be essential.

|

| Fig. 7. A "fatter octopus" may be problematic. |

There is a practical problem, though. When we generate the normal

distribution of solution points around the initial guess, we have to

specify the standard deviation that represents the reach of the "tentacles"

(Figure 6). As illustrated by Figure 7, the larger the standard

deviation ("a fatter octopus"), the more likely the algorithm will find

more than one optima and may lose the nearest one as a result. In most cases, finding a

solution that is close enough may be good enough in terms of guidance. But if this weakness

becomes an issue, we can always reduce the standard deviation to search the neighborhood more carefully. The

downside is that it will slow down the optimization process, though.

In summary, the Octopus Algorithm that I have invented seems to be

able to accurately guide a designer to the nearest optimal solution in an

engineering design process. Unlike Paul the Octopus that relied on

supernatural forces (or did it?), the Octopus Algorithm is an AI technique that we create, control, and leverage. On a separate note, since some genetic algorithms also employ tournament selection like the

World Cup, perhaps Paul the Octopus was thinking like a genetic

algorithm (joke)? For the computer

scientists who happen to be reading this article, it may also add a new

method for multi-niche optimization besides fitness sharing and

probabilistic crowding.